IMatch supports a range of AI service providers, both cloud-based commercial offerings and free locally installed AI services.

Click the AI service you plan to use for detailed information.

As explained below in more detail, the free software Ollama enables you to run powerful AI models on your PC at no cost (except for energy) and without privacy issues. Your images never leave your computer.

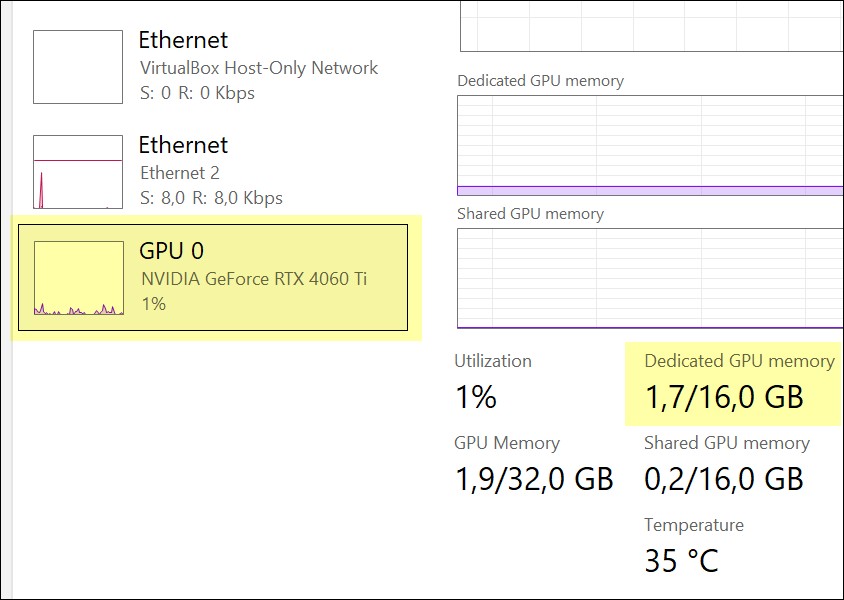

However, AI is very demanding when it comes to hardware, especially your graphics card. To run any of the modern models locally, you'll need a beefy graphics card with at least 8 GB VRAM on board; preferably, 16 GB VRAM.

Open Windows Task Manager with Shift + Ctrl + ESC and switch to the Performance tab. Click the GPU on the left to see how much memory your graphics card has:

If this counter shows less than 8 GB, it is not really up to the task of running AI locally. At least not with the models and AI available today.
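The thresholds above can be expressed as a small check. This is a minimal sketch, not part of IMatch: the function names are hypothetical, and the optional query assumes an NVIDIA card with the `nvidia-smi` command-line tool on the PATH. On other hardware, read the value from Task Manager and pass it in by hand.

```python
import subprocess

MIN_VRAM_GB = 8         # minimum named in the text above
PREFERRED_VRAM_GB = 16  # preferred amount named in the text above


def classify_vram(vram_gb: float) -> str:
    """Classify a VRAM size against the 8 GB / 16 GB guidance."""
    if vram_gb < MIN_VRAM_GB:
        return "insufficient"
    if vram_gb < PREFERRED_VRAM_GB:
        return "minimum"
    return "preferred"


def query_nvidia_vram_gb() -> list[float]:
    """Return total VRAM in GB per NVIDIA GPU, or [] if nvidia-smi is unavailable.

    Assumption: nvidia-smi reports memory.total in MiB when 'nounits' is set.
    """
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True, timeout=10,
        ).stdout
    except (OSError, subprocess.SubprocessError):
        return []
    return [int(line) / 1024 for line in out.splitlines() if line.strip()]
```

For example, `classify_vram(6)` reports a 6 GB card as `"insufficient"` under the guidance above.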

Models of the latest generation, like OpenAI's GPT-5 or Qwen 3, are so-called reasoning models. They "think" before they answer, which is supposed to produce better results.

The thinking step is designed to help with complex problems in math, physics, and science. It does not necessarily improve descriptions and keywords, which is the use case for IMatch AutoTagger.

Before you use these (usually more expensive and slower) models, run some tests to see whether the longer response time and higher cost are worth it in terms of better descriptions, keywords, and traits for your images. This may be the case for tasks like exact species or landmark identification or chart analysis.

At the time of writing (October 2025), the non-reasoning models like GPT-4.1, Qwen 2.5, or Gemma 3 often produce very similar results, but much faster.

According to the Ollama and LM Studio documentation, you can disable thinking by adding /no_think to the beginning or end of your prompt. This should reduce or even skip the thinking phase.
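As a small illustration of the tip above, the following sketch adds the `/no_think` marker to a prompt. The helper name is hypothetical, and whether a given model honors the marker depends on the model and runner, so treat this as an experiment rather than a guarantee.

```python
NO_THINK_TAG = "/no_think"  # marker named in the text above


def with_no_think(prompt: str, at_start: bool = False) -> str:
    """Return the prompt with /no_think added once, at the end or the start."""
    prompt = prompt.strip()
    if NO_THINK_TAG in prompt.split():
        return prompt  # already present, do not add it twice
    if at_start:
        return f"{NO_THINK_TAG} {prompt}"
    return f"{prompt} {NO_THINK_TAG}"
```

In AutoTagger itself, you would simply type the marker into the prompt field; the helper only shows the two placements the documentation mentions.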

AutoTagger supports cloud-based AI models from companies like OpenAI, Google, and Mistral, as well as models you can run locally using the free software Ollama or LM Studio.

| Modern AI Services | These recommended services use large language models (LLMs) and prompts to produce descriptions and keywords. |
|---|---|

| OpenAI | A very good and popular service (ChatGPT) that IMatch AutoTagger can use to automatically add high-quality descriptions, keywords, and traits to images. Creating an API key is quite easy, and the cost of using OpenAI models is affordable, even for personal use. To get started, follow the instructions on this page. The page allows you to sign up. Then follow the instructions to create an API key. See the OpenAI Models Overview for available models, costs, and rate limits. Open the AutoTagger configuration dialog box via Edit > Preferences > AutoTagger and select OpenAI as the AI. The model drop-down now lists all OpenAI models supported by AutoTagger. Create an API key for the model(s) you want to use. Don't forget to configure your rate limits for OpenAI, which depend on your usage tier. See rate limits for more information. OpenAI frequently releases new models. We keep you informed via the IMatch release notes when we add support for new models to AutoTagger. OpenAI works on any computer, without requiring a powerful graphics card or other special hardware. You can deposit $10 into your account and disable automatic renewal. When your balance is depleted, OpenAI stops processing requests, and AutoTagger displays error messages. By disabling automatic renewal, you have full control over your spending. With just $10, you can automatically add descriptions and keywords to thousands of images. If you already have a 'pro' ChatGPT account, note that you'll still need to create a developer account in order to get an API key for IMatch to use. OpenAI keeps these separate. |

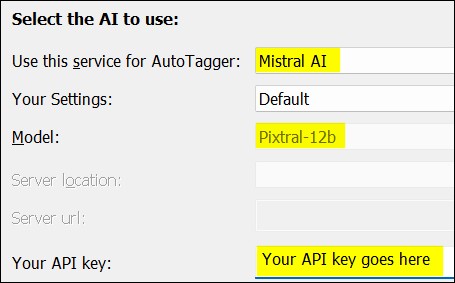

| Mistral AI | Mistral is a company based in France. They are known for releasing many of their AI models for free to the open-source community, to support further enhancements in AI and to make AI technology available for local use (on our PCs). Creating an API key is quite easy, and the cost of using Mistral models is affordable, even for personal use. To get started, follow the instructions on this page to create an account. When you have an account, you can subscribe to a plan. For initial testing, subscribe to the free plan. No credit card is needed. The free plan allows you to create an API key, which you can then use with AutoTagger. The free plan has rather severe rate limits (requests per day and tokens used), but it works well enough to try out the results you can expect from Mistral. Open the AutoTagger configuration dialog box via Edit > Preferences > AutoTagger and select Mistral AI as the AI. The model drop-down now lists all Mistral models supported by AutoTagger. Create an API key for the model(s) you want to use. The Pixtral model is the first Mistral model with image understanding and reasoning. AutoTagger supports it. Mistral works on any computer, without requiring a powerful graphics card or other special hardware. Privacy: Mistral, as a European company based in France, adheres to the strict European data privacy rules and regulations. This can make Mistral the preferred choice for users, especially corporate users, who must follow the same rules or who consider privacy important. |

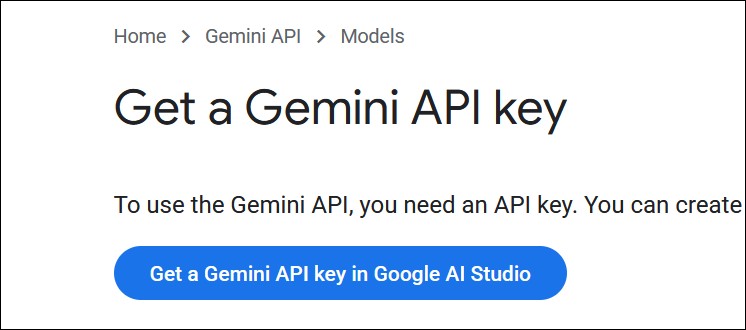

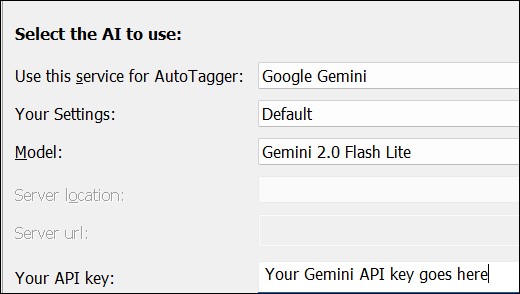

| Google Gemini | To get started, follow the instructions to create an account and get a Gemini API key. The blue button starts the process. Just sign in with your Google account (or create one) and follow the instructions. Create a project to get the API key you need to enter in IMatch AutoTagger. Ignore all the other information about "setting up your API key". AutoTagger takes care of all of that. After getting your API key, select the Gemini AI in AutoTagger, add your API key, and you're ready to go. At the time of writing (April 2025), Google Gemini excels at anything related to location (places, landmarks, tourist spots, well-known buildings), animal breeds, OCR, and many other things. If you frequently autotag typical vacation and travel photos, give the Gemini AI a test drive. Also, if you are interested in bird photography, cars, planes, boats, and similar subjects, Gemini AI with the Flash-Lite model (very affordable) should produce outstanding results. Search for |

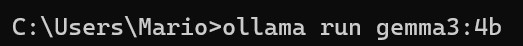

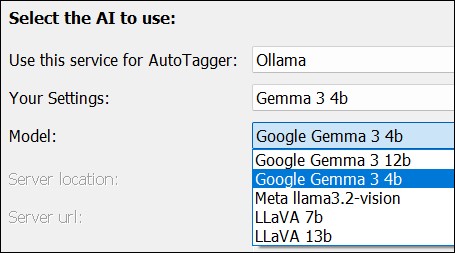

| Ollama | IMatch Learning Center: There is a free video tutorial showing how to install and use Ollama.

Ollama is a free, open-source project that enables you to run artificial intelligence (AI) applications locally on your PC, without relying on cloud connectivity. Since your images never leave your computer, there are no privacy issues. With a moderately powerful graphics card (NVIDIA preferred) and at least 6 GB VRAM, Ollama can run smaller AI models like Gemma 3:4b or the LLaVA model efficiently. Running larger models requires more VRAM (12 GB or more).

1. Installing Ollama

To get started, visit the official Ollama website and download the installation package for Windows. Double-click the downloaded file to begin the installation process, which requires no administrator privileges. After installation, Ollama appears in the Windows Taskbar and starts automatically with Windows, so it is always available when you need it.

2. Downloading a Model

As explained in the AutoTagger Prompting help topic, Ollama requires a model to function. Fortunately, many free models are available for various applications, including image analysis, coding, and chat. For an overview of all available Ollama models, visit Ollama's Model Library page. For IMatch, only multimodal (vision-enabled) models are relevant.

Installing the Google Gemma 3 Model

This is a multimodal model released by Google in March 2025. It offers top-of-the-line vision processing, even in the variants reduced to 12b or 4b parameters. It supports 140 languages, so you can get sensible and grammatically correct descriptions, headlines, and traits in languages other than English. The 4b variant needs about 5 GB of VRAM on the graphics card for optimal performance. For the 12b model, we recommend a graphics card with 16 GB of VRAM.

The model will begin downloading, which can take a while, depending on your internet speed. The Gemma 3:4b model is approximately 5 GB in size. Once the download completes, you're ready to start using Ollama with IMatch AutoTagger. Continue with the AutoTagger help topic to learn how to add descriptions, keywords, and traits to your images automatically. After the model has been downloaded, you can close the command prompt window.

If your graphics card has 16 GB of VRAM, download the larger Gemma 12B model instead:

AutoTagger has configurations for both the 4b and 12b variants, and you can just create a new setting with the model of your choice:

If you find the results of Gemma 3 lacking for your type of photography, try one of the other vision-enabled models available for Ollama.

Installing the Qwen 3 Model

Qwen is a modern model released in the fourth quarter of 2025. We recommend giving it a try to see how well it works with your images. Use `ollama run qwen3-vl:8b` for the 8b version and `ollama run qwen3-vl:4b` for the smaller 4b model.

Note: These are thinking/reasoning models, which take longer to produce better answers. According to the Ollama and LM Studio documentation, appending the term

Installing the LLaVA Model (Outdated)

The LLaVA model has been superseded by the Google Gemma and Qwen models listed above. It is another model with vision capabilities. The steps to download and install the LLaVA model are the same as the steps for the Gemma 3 model above.

If you have a powerful graphics card with at least 12 GB of VRAM, consider downloading the larger and more advanced LLaVA:13b model:

Installing the Meta Llama Vision Model

This is a model released by Meta (Facebook, Instagram). It offers vision and image reasoning similar to or even better than LLaVA. You can download and install it with

Our tests show that this model is superior to the LLaVA model when it comes to producing descriptions and keywords in languages other than English. This model requires a graphics card with at least 12 GB of VRAM; 16 GB is recommended.

Ollama Settings in AutoTagger

If you have downloaded multiple models, you can select them in AutoTagger: |

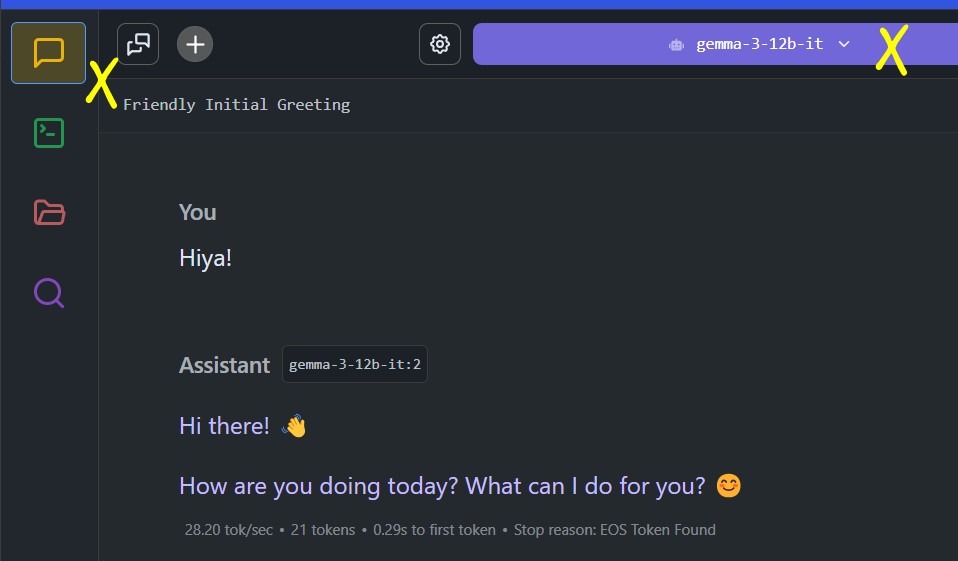

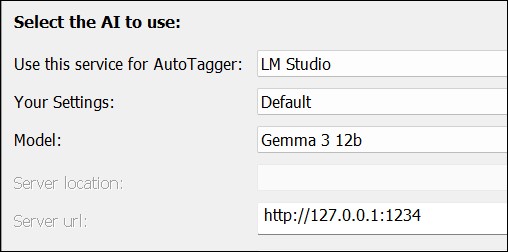

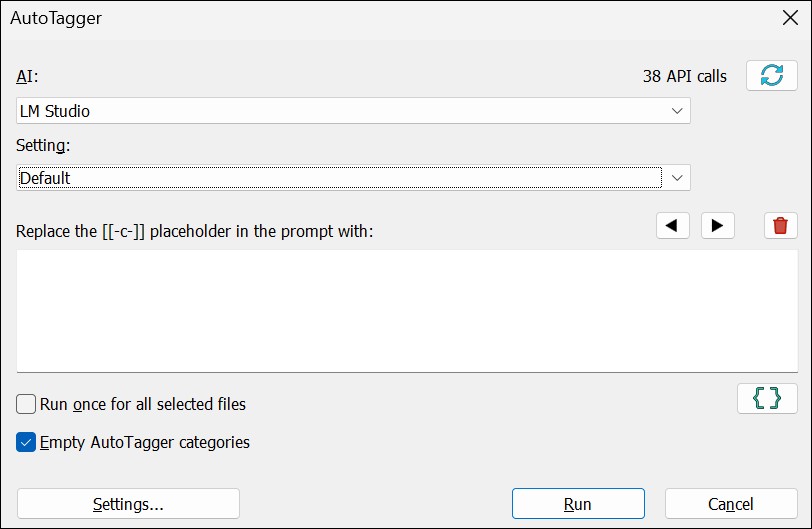

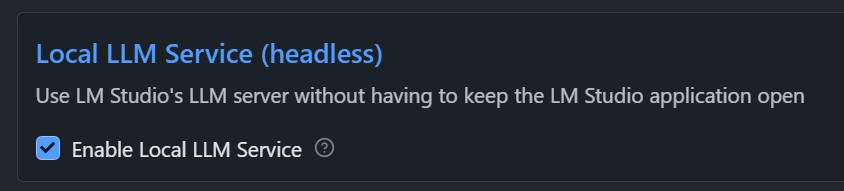

| LM Studio | LM Studio is an application that enables you to run powerful AI models locally on your PC. It is free for private use. Unlike Ollama, LM Studio has a comfortable user interface and is designed to be used both as an AI runner (like Ollama) and as an interactive tool for chatting with the models installed in LM Studio. LM Studio is the better choice when you also want to use AI models outside of IMatch. To download and install LM Studio on your PC, follow the instructions on the LM Studio website. The LM Studio documentation explains all features and usage in detail.

Download the Gemma 3 Model

At the time of writing, Google's Gemma 3 models are the most powerful models for image analysis available. They work very well with AutoTagger. After installing LM Studio, click the

This opens a screen where you can search for models. Search for the word

Download the Gemma 4B model if your graphics card has less than 12 GB VRAM; otherwise, download the Gemma 12B model for even better results. LM Studio automatically checks whether a model is suitable for your PC and selects the available subtype (quantized version) that works best on your PC. If a model will not run well on your PC due to hardware restrictions, you will be warned.

Start the LM Studio Server

This is the important part. The LM Studio server makes models accessible to other applications, like IMatch. At the bottom of the LM Studio app window, click on the Power User button:

Now click on the Developer button on the left:

Enable the server with the switch (1), then click (2) and select the Gemma 3 model you have downloaded. Use the default settings for everything.

Have a Chat

To test the installation, create a new chat and ask Gemma 3 something:

Using LM Studio and Models in IMatch AutoTagger

AutoTagger is already configured to support LM Studio and suitable models. Not all models you can use with LM Studio are multimodal (vision-enabled). For AutoTagger, only vision-enabled models are usable, such as

However, you can install any other model offered by LM Studio and use it for chatting, spelling and grammar checks, text generation, SEO, math, etc. The LM community makes suggestions regarding which models to use for each purpose. Things are changing rapidly, and new models are released frequently. Vision-enabled models are marked with this icon:

Open Edit menu > Preferences > AutoTagger and switch the AI to LM Studio. In the Model drop-down, select the Gemma 3 model available in LM Studio. We have installed the 12B Gemma 3 model in this case:

Check the prompt and any settings you need, then confirm the configuration with OK. Select one or more images in a File Window and start AutoTagger with F7. Select the LM Studio AI and the setting you want to use and run it:

Make LM Studio Run Automatically

Click on the Gear icon button at the bottom right of the LM Studio app window to open the settings. Scroll down until you see this option and enable it:

You can now use LM Studio models in AutoTagger without starting LM Studio manually. LM Studio automatically loads the model requested by AutoTagger.

Using Qwen 3 with LM Studio

To use the supported Qwen models, install these models in LM Studio:

Using Mistral Ministral with LM Studio

This is the latest generation of models from Mistral in France. The models were released in December 2025 and are well worth trying. To use Ministral with AutoTagger, install any of these versions:

Ollama or LM Studio for Local AI?

Ollama mostly just works in the background. Use it when you only want to work with AI models within IMatch. LM Studio allows you to chat and interact with locally installed AI models much like you would use cloud-based AI such as ChatGPT, Mistral, Copilot, or Gemini. This enables you to access AI capabilities and functions without any privacy issues or cost. |
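Both Ollama and LM Studio expose the installed models through a local HTTP server, which is what lets other applications use them. AutoTagger handles this communication for you. The sketch below only illustrates what such a request can look like if you want to test a local server outside IMatch. It assumes the default local endpoints (port 11434 for Ollama, port 1234 for LM Studio's OpenAI-compatible server). The function names are hypothetical, and the model names you pass in must match whatever you have installed.

```python
import base64
import json
import urllib.request

# Assumed default endpoints of the local servers.
OLLAMA_URL = "http://localhost:11434/api/generate"
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def encode_image(image_bytes: bytes) -> str:
    """Base64-encode raw image bytes for transport in a JSON body."""
    return base64.b64encode(image_bytes).decode("ascii")


def ollama_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a non-streaming request body for Ollama's generate endpoint."""
    return {
        "model": model,
        "prompt": prompt,
        "images": [encode_image(image_bytes)],
        "stream": False,
    }


def lmstudio_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build an OpenAI-style chat request body with one JPEG image attached."""
    data_uri = "data:image/jpeg;base64," + encode_image(image_bytes)
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_uri}},
            ],
        }],
    }


def post_json(url: str, payload: dict, timeout: float = 120.0) -> dict:
    """POST a JSON payload to a running local server and decode the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

With a server running, `post_json(OLLAMA_URL, ollama_payload(...))` should return the generated text under the `"response"` key, while the LM Studio reply carries it under `["choices"][0]["message"]["content"]`.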

| Classic AI Services | These providers don't support the use of user-supplied prompts. |
|---|---|
| Google Cloud Vision | Google offers very rich and precise image tagging. If you already have a Google developer account for the IMatch Map Panel (Google Maps), you can add computer vision support with a few clicks in the Google Developer Console. |
| Microsoft Azure Computer Vision | Microsoft provides computer vision services via their worldwide Azure cloud. The free tier gives you 1,000 images per month for free, but has some performance restrictions. Only 20 files can be processed per minute. If you already have a Microsoft developer account for the IMatch Map Panel (Bing Maps) you can add computer vision support with a few clicks in the Microsoft Developer Console. |
| imagga | Popular European company (Bulgaria). Very simple to open an account. 1,000 processed images per month free. Strong European privacy laws and regulations apply. Imagga uses a two-part key. You enter it in the form |
| Clarifai | Popular US-based service. It is very easy to open an account and you'll get 1,000 processed images per month for free. |
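To get a feeling for what a per-minute limit means in practice, the following sketch estimates the minimum processing time for a batch. It uses the Azure free-tier figures quoted in the table above (20 files per minute, 1,000 images per month) as defaults. The function name is hypothetical, and real throughput also depends on network and service response times.

```python
import math


def minutes_to_process(image_count: int, files_per_minute: int = 20) -> int:
    """Minimum whole minutes needed to process a batch under a per-minute limit."""
    if image_count < 0 or files_per_minute <= 0:
        raise ValueError("image_count must be >= 0 and files_per_minute > 0")
    return math.ceil(image_count / files_per_minute)


# The full free monthly quota of 1,000 images at 20 files per minute:
full_quota_minutes = minutes_to_process(1000)
```

Under these assumptions, working through the full free monthly quota takes at least 50 minutes.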

AI companies bill you per request and/or the number of tokens used. Pricing sometimes varies, depending on the model you use (more powerful models are more expensive).

Check the website of the AI vendor you use for pricing information.

All AI vendors provide billing information (requests made and tokens used in the current billing period) on their website. Keep an eye on that when you are using a subscription model with automatic renewal.

The AI vendor bills per request, usually in units like 1,000 requests (a.k.a. 1,000 images processed).

The AI vendor calculates the cost per request based on the number of tokens used for the prompt (input tokens) and the response (output tokens).

As a rule of thumb, 100 tokens correspond to about 75 words of English text, or roughly four characters per token.

For example, the prompt "Describe this image in the style of a news headline" sent by AutoTagger to OpenAI produces 10 tokens (GPT-4o-mini model). The response "A close-up portrait of a majestic snowy owl sitting on a perch in a dimly lit studio, staring directly into the camera." has 26 tokens.
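If you want a quick estimate before sending anything, a character-based heuristic is enough. The sketch below assumes the common rule of thumb of roughly four characters per token for English text. This is an approximation, not the vendor's tokenizer, and the function name is hypothetical.

```python
import math

CHARS_PER_TOKEN = 4.0  # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Rough token estimate from the character count (not an exact tokenizer)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


prompt = "Describe this image in the style of a news headline"
response = ("A close-up portrait of a majestic snowy owl sitting on a perch "
            "in a dimly lit studio, staring directly into the camera.")

prompt_estimate = estimate_tokens(prompt)
response_estimate = estimate_tokens(response)
```

The estimates come out somewhat higher than the actual counts quoted above (10 and 26 tokens), which is typical for short texts made of common words. Use the heuristic for budgeting only.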

As of January 2025, OpenAI charges US$0.15 (fifteen cents) per million input tokens and US$0.60 (sixty cents) per million output tokens for the GPT-4o-mini model, plus a small fee per request. Mistral AI charges US$0.15 per million tokens (input and output) for their Pixtral model.

These affordable rates mean that you can process many thousands of images for a few dollars. And save days or even weeks of work.
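The arithmetic behind this can be sketched as follows. The rates are the OpenAI figures quoted above. The per-image token counts in the example (1,000 input tokens, 100 output tokens) are hypothetical placeholders, and the sketch ignores the per-request fee. Vendors also count the image itself toward the input tokens, so check your billing page for real numbers.

```python
INPUT_USD_PER_MILLION = 0.15   # rate quoted in the text above
OUTPUT_USD_PER_MILLION = 0.60  # rate quoted in the text above


def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Token cost of one request in US dollars (per-request fee not included)."""
    return (input_tokens * INPUT_USD_PER_MILLION
            + output_tokens * OUTPUT_USD_PER_MILLION) / 1_000_000


def images_per_budget(budget_usd: float, input_tokens: int,
                      output_tokens: int) -> int:
    """Number of whole requests a budget covers at the given token usage."""
    return int(budget_usd / request_cost_usd(input_tokens, output_tokens))


# Hypothetical usage per image: 1,000 input tokens and 100 output tokens.
cost_per_image = request_cost_usd(1_000, 100)
images_for_ten_dollars = images_per_budget(10.0, 1_000, 100)
```

Longer prompts, traits, or more elaborate responses raise the token counts and lower the number of images a given budget covers.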

Billing metrics will vary quite a bit, because your prompts may be longer, you may be using traits (more input tokens), or the AI may return more elaborate responses (more output tokens). Use the billing page of the AI vendor to keep track of the actual cost accumulated over the billing period.

AutoTagger lists the number of requests made and tokens used since the last reset. However, there may be differences in how the AI service counts these metrics. Always use the info displayed in your dashboard after logging into your service to see usage and pricing data.